Overfitting and underfitting are the main causes of poor model accuracy. We can choose the right approach to improving a model's performance only after we determine whether it is overfitting or underfitting.


Overfitting and Underfitting

When a model does not fit the training data well, we call it underfitting. We also say that the model has high bias. Because an underfitted model cannot capture the patterns in the training data, its predictions will not be accurate enough.

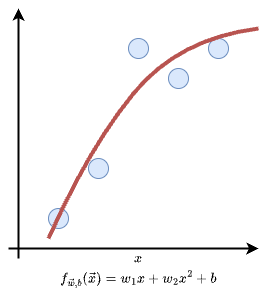

The figure below shows an example of underfitting in a regression model. The model does not fit the training data well enough.

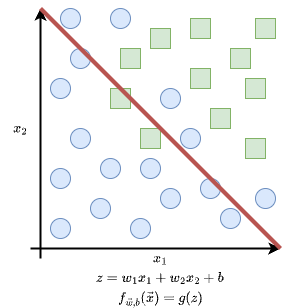
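To make this concrete, here is a minimal NumPy sketch that fits a straight line to data generated from a curve. The quadratic ground truth, the noise level, and the random seed are assumptions chosen for illustration, not data from the figure. A line cannot bend, so its error stays high even on the data it was trained on. That is the signature of underfitting.

```python
import numpy as np

# Hypothetical toy data: y = x^2 plus Gaussian noise (standard deviation 0.5).
rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 30)
y = x**2 + rng.normal(scale=0.5, size=x.shape)

# Fit a degree-1 polynomial (a straight line), which is too simple for a curve.
coeffs = np.polyfit(x, y, deg=1)
y_pred = np.polyval(coeffs, x)

# Mean squared error measured on the training data itself.
train_mse = np.mean((y - y_pred) ** 2)
print(f"training MSE of a straight line: {train_mse:.2f} (noise variance is 0.25)")
```

The training error comes out far above the noise variance. No choice of slope and intercept can close that gap, because the model itself is too simple.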

Classification models can also underfit. As shown in the figure below, the model cannot separate the two classes of objects well.

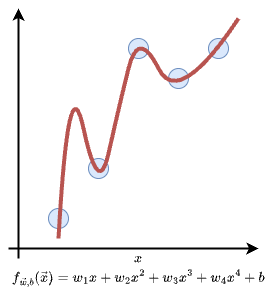

Conversely, a model may fit the training data perfectly or nearly so, yet the error between its predicted values and the actual values on new data can still be very large. This is called overfitting, and we also say that the model has high variance. As shown in the figure below, the regression model passes through every training data point but becomes very inaccurate when predicting new data.

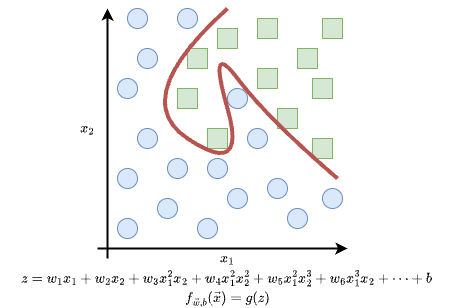
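The sketch below reproduces this effect with NumPy. It assumes hypothetical data (10 noisy samples of y = x^2) and fits a degree-9 polynomial. That polynomial has exactly enough coefficients to pass through every training point. Its training error is essentially zero, but it is noticeably larger on fresh test points drawn from the same curve.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical data from the same kind of curve: y = x^2 plus noise.
x_train = np.linspace(-3, 3, 10)
y_train = x_train**2 + rng.normal(scale=0.5, size=10)
x_test = rng.uniform(-3, 3, size=200)
y_test = x_test**2 + rng.normal(scale=0.5, size=200)

# A degree-9 polynomial has 10 coefficients, enough to pass through all 10 training points.
coeffs = np.polyfit(x_train, y_train, deg=9)

train_mse = np.mean((y_train - np.polyval(coeffs, x_train)) ** 2)
test_mse = np.mean((y_test - np.polyval(coeffs, x_test)) ** 2)
print(f"train MSE: {train_mse:.6f}  test MSE: {test_mse:.3f}")
```

The model has memorized the noise in the 10 training points. It wiggles between them, and that is why it does worse on new data.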

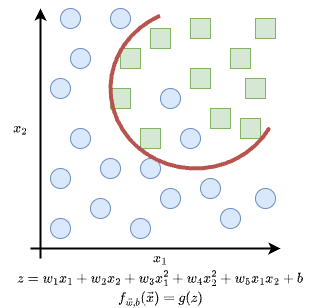

The figure below shows an example of overfitting in a classification model.

When a model fits the training data well but not perfectly, keeping the error small, we say that it generalizes well. We also say that the model has low bias and low variance. When such a model predicts new data, the error between the predicted values and the actual values will be small. This is the model we want to train.

The figure below shows a regression model that generalizes well. Although the model does not pass through every training data point exactly, its error is small.
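As a sketch of what "small error on both old and new data" looks like, the snippet below fits a degree-2 polynomial. It uses hypothetical data drawn from an assumed quadratic curve with noise of standard deviation 0.5. Because the model's complexity matches the assumed ground truth, both the training and test errors should land close to the noise variance (0.25). Noise is the part of the error that no model can remove.

```python
import numpy as np

rng = np.random.default_rng(2)
noise_sd = 0.5  # assumed noise level of the hypothetical data

x_train = rng.uniform(-3, 3, size=30)
y_train = x_train**2 + rng.normal(scale=noise_sd, size=30)
x_test = rng.uniform(-3, 3, size=200)
y_test = x_test**2 + rng.normal(scale=noise_sd, size=200)

# Degree 2 matches the (assumed) quadratic ground truth.
coeffs = np.polyfit(x_train, y_train, deg=2)
train_mse = np.mean((y_train - np.polyval(coeffs, x_train)) ** 2)
test_mse = np.mean((y_test - np.polyval(coeffs, x_test)) ** 2)

print(f"train MSE: {train_mse:.3f}  test MSE: {test_mse:.3f}  noise variance: {noise_sd**2:.3f}")
```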

The figure below shows a classification model that generalizes well. The model correctly groups all the square objects, even though two circular objects also fall inside the square region.

How to Solve Overfitting and Underfitting?

When a model overfits, we can try the following remedies.

- Collect more training data. With more training examples, the model has a harder time fitting the noise in individual points, so the learned function becomes smoother and overfitting is reduced.

- Use fewer features. Too many features make a model complex, which can lead to overfitting, especially when some features are irrelevant to what we are predicting. Removing those features simplifies the model and can reduce overfitting.

- Use regularization to reduce the magnitude of the parameters (weights), which keeps the model from relying too heavily on any single feature.
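Regularization can be sketched as ridge (L2) regression, one common form. It adds a penalty λ times the sum of squared weights to the squared error. The closed-form solution below shows how a larger λ shrinks the weights of a degree-9 polynomial. The data, the λ values, and the choice not to penalize the intercept are assumptions for illustration. Courses and libraries scale λ differently, for example dividing by the number of examples.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """L2-regularized least squares: minimize ||Xw - y||^2 + lam * ||w[1:]||^2.

    Column 0 is assumed to be the constant (bias) column and is not penalized.
    """
    penalty = lam * np.eye(X.shape[1])
    penalty[0, 0] = 0.0  # leave the intercept unregularized
    # Setting the gradient to zero gives (X^T X + penalty) w = X^T y.
    return np.linalg.solve(X.T @ X + penalty, X.T @ y)

# Hypothetical data: 10 noisy samples of y = x^2 on [-1, 1].
rng = np.random.default_rng(1)
x = np.linspace(-1, 1, 10)
y = x**2 + rng.normal(scale=0.1, size=x.shape)

# Degree-9 polynomial features: columns 1, x, x^2, ..., x^9.
X = np.vander(x, N=10, increasing=True)

norms = []
for lam in [0.0, 0.01, 1.0]:
    w = ridge_fit(X, y, lam)
    norms.append(np.linalg.norm(w[1:]))
    print(f"lambda={lam:<5} size of weights ||w[1:]|| = {norms[-1]:.2f}")
```

With λ = 0 this is ordinary least squares, which interpolates the noisy points with large weights. Increasing λ trades a little training error for smaller weights and a smoother curve.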

Conversely, when a model underfits, we can try the following remedy.

- Use more features, for example by adding squared or other polynomial terms, to make the model more complex so that it can fit the training data better.
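The sketch below illustrates the "more features" remedy on a classification problem. It trains logistic regression with plain batch gradient descent on hypothetical 2D data in which one class lies inside a circle. With only the features x1 and x2, the decision boundary is a straight line and the model underfits. Adding the squared terms x1^2 and x2^2 lets the boundary curve into an ellipse. The data, learning rate, and step count are assumptions, not tuned values.

```python
import numpy as np

def train_logistic(X, y, lr=0.5, steps=10000):
    """Batch gradient descent on the mean logistic (cross-entropy) loss."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))      # predicted probabilities
        w -= lr * X.T @ (p - y) / len(y)        # gradient step
    return w

def accuracy(X, y, w):
    # Predict class 1 when the linear score is positive (probability > 0.5).
    return np.mean(((X @ w) > 0) == (y == 1))

rng = np.random.default_rng(0)
pts = rng.uniform(-2, 2, size=(400, 2))
# Hypothetical labels: class 1 inside a circle of radius 1.2, class 0 outside.
y = (np.sum(pts**2, axis=1) < 1.2**2).astype(float)

ones = np.ones((len(pts), 1))
X_linear = np.hstack([ones, pts])          # features: 1, x1, x2
X_quad = np.hstack([ones, pts, pts**2])    # adds x1^2, x2^2

acc_linear = accuracy(X_linear, y, train_logistic(X_linear, y))
acc_quad = accuracy(X_quad, y, train_logistic(X_quad, y))
print(f"training accuracy, linear features:    {acc_linear:.2f}")
print(f"training accuracy, with squared terms: {acc_quad:.2f}")
```

Both accuracies are measured on the training data. Underfitting shows up there first, before any new data is involved.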

For details on regularization, please refer to the following article.

Conclusion

After reading this article, you should understand what overfitting and underfitting are and why they matter. Their remedies differ. So when a model's accuracy is low, we cannot adjust it correctly until we determine whether it is overfitting or underfitting.
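A rough way to make that distinction is to compare the training error with an error level you consider acceptable, and the validation error with the training error. The helper below is a hypothetical sketch of that rule of thumb. The function name and both thresholds are assumptions that depend on the problem. It checks for underfitting first, so a model with both problems is reported as underfitting.

```python
def diagnose(train_error: float, val_error: float,
             acceptable_error: float = 0.05, max_gap: float = 0.02) -> str:
    """Hypothetical rule of thumb for telling underfitting from overfitting.

    acceptable_error and max_gap are illustrative thresholds, not standard values.
    """
    if train_error > acceptable_error:
        # The model cannot even fit the data it was trained on.
        return "underfitting (high bias): try more features"
    if val_error - train_error > max_gap:
        # Good on training data, much worse on unseen data.
        return "overfitting (high variance): try more data, fewer features, or regularization"
    return "generalizes well"

print(diagnose(0.20, 0.22))
print(diagnose(0.01, 0.15))
print(diagnose(0.03, 0.04))
```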

References

- Andrew Ng, Machine Learning Specialization, Coursera.