The confusion matrix is a tool for measuring the performance of classification models. It lets data scientists analyze and optimize their models, which makes it one of the first tools to learn when studying machine learning. This article also introduces the metrics derived from it: accuracy, recall, precision, and F1 score.


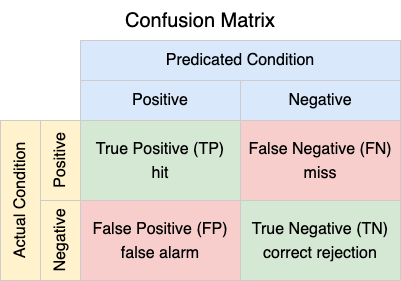

Confusion Matrix

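For a binary classifier, a confusion matrix consists of four conditions. Each one counts the samples that fall into one combination of actual class (positive or negative) and predicted class (positive or negative). The meaning of each condition is as follows: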

- True Positive (TP): The sample is actually positive and is predicted to be positive.
  - For example, the object in the picture is a dog, and the model identifies it as a dog.

- False Negative (FN): The sample is actually positive but is predicted to be negative.
  - For example, the object in the picture is a dog, but the model identifies it as not a dog.

- False Positive (FP): The sample is actually negative but is predicted to be positive.
  - For example, the object in the picture is not a dog, but the model identifies it as a dog.

- True Negative (TN): The sample is actually negative and is predicted to be negative.
  - For example, the object in the picture is not a dog, and the model identifies it as not a dog.
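As a minimal sketch, the four conditions can be counted from a list of actual labels and a list of predicted labels. The function name `confusion_counts`, the `positive` parameter, and the encoding 1 = "dog", 0 = "not a dog" are illustrative assumptions, not part of any particular library.

```python
from typing import Sequence, Tuple


def confusion_counts(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[int, int, int, int]:
    """Return (TP, FN, FP, TN) for a binary task.

    `positive` is the label treated as the positive class
    (here, hypothetically, 1 = "dog").
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fn = fp = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive and predicted == positive:
            tp += 1  # a dog, predicted as a dog
        elif actual == positive:
            fn += 1  # a dog, predicted as not a dog
        elif predicted == positive:
            fp += 1  # not a dog, predicted as a dog
        else:
            tn += 1  # not a dog, predicted as not a dog
    return tp, fn, fp, tn


# Hypothetical labels: 1 = dog, 0 = not a dog
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
print(confusion_counts(y_true, y_pred))  # (3, 1, 1, 3)
```

Every sample lands in exactly one of the four branches, so TP + FN + FP + TN always equals the number of samples.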

Accuracy

Accuracy measures the ratio of correct predictions (TP and TN) to all predictions: Accuracy = (TP + TN) / (TP + TN + FP + FN). It answers the question: how often does the model predict correctly, whether the actual class is positive or negative?
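A minimal sketch of the accuracy formula, taking the four counts directly. The function name and the example counts are hypothetical.

```python
def accuracy(tp: int, fn: int, fp: int, tn: int) -> float:
    """Share of all samples classified correctly: (TP + TN) / total."""
    total = tp + fn + fp + tn
    if total == 0:
        raise ValueError("no samples to evaluate")
    return (tp + tn) / total


# Hypothetical counts: 3 TP, 1 FN, 1 FP, 3 TN -> 6 correct out of 8
print(accuracy(tp=3, fn=1, fp=1, tn=3))  # 0.75
```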

Recall

Recall measures the ratio of true positives to actual positives: Recall = TP / (TP + FN). That is, recall measures the rate at which actual positives are correctly identified. It answers the question: when a sample is actually positive, how often does the model predict it as positive?
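A minimal sketch of recall. Only TP and FN are needed, because together they make up all actual positives. Returning 0.0 when there are no actual positives is an assumption made here to avoid division by zero; other tools may handle that case differently.

```python
def recall(tp: int, fn: int) -> float:
    """Share of actual positives the model found: TP / (TP + FN)."""
    actual_positives = tp + fn
    # Assumption: define recall as 0.0 when there are no actual positives.
    return tp / actual_positives if actual_positives else 0.0


# Hypothetical counts: 3 dogs found, 1 dog missed
print(recall(tp=3, fn=1))  # 0.75
```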

Precision

Precision measures the ratio of true positives to predicted positives: Precision = TP / (TP + FP). That is, precision measures the rate at which positive predictions are correct. It answers the question: when the model predicts positive, how often is it correct?
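A minimal sketch of precision. It mirrors recall but divides by all predicted positives (TP + FP) instead of all actual positives. As above, returning 0.0 when the model predicts no positives at all is an assumption of this sketch.

```python
def precision(tp: int, fp: int) -> float:
    """Share of positive predictions that are correct: TP / (TP + FP)."""
    predicted_positives = tp + fp
    # Assumption: define precision as 0.0 when nothing is predicted positive.
    return tp / predicted_positives if predicted_positives else 0.0


# Hypothetical counts: 3 correct "dog" predictions, 2 wrong ones
print(precision(tp=3, fp=2))  # 0.6
```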

F1-Score

F1-score is the harmonic mean of precision and recall: F1 = 2 × Precision × Recall / (Precision + Recall). A model with high recall may have low precision, and vice versa. A good model balances recall and precision and keeps both as high as possible, so we need a metric such as F1-score that takes both into account. Because it is a harmonic mean, F1-score stays low whenever either precision or recall is low.
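A minimal sketch of F1-score that compares it with a plain arithmetic mean for a lopsided model. The function name `f1` and the precision/recall values are hypothetical, and returning 0.0 when both inputs are 0 is an assumption to avoid division by zero.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall: 2 * P * R / (P + R)."""
    if precision + recall == 0:
        return 0.0  # assumption: F1 is 0 when both precision and recall are 0
    return 2 * precision * recall / (precision + recall)


# Hypothetical lopsided model: perfect precision, very low recall
p, r = 1.0, 0.1
print(round((p + r) / 2, 4))  # arithmetic mean: 0.55
print(round(f1(p, r), 4))     # harmonic mean (F1): 0.1818
```

The arithmetic mean rewards this model with 0.55 even though it misses 90% of the positives, while F1 stays close to the weaker of the two values. This is why a harmonic mean is used to balance recall and precision.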

Conclusion

In addition to accuracy, recall, precision, and F1 score introduced in this article, the confusion matrix can also produce many other metrics. With these metrics, we can measure the performance of models.